CarpetX


Revision as of 17:03, 6 October 2021

The following is the work done by S. Cupp on the CarpetX framework.


CarpetX Interpolator

- Added the interface to connect Cactus' existing interpolation system to the CarpetX interpolator

- The AHFinder interpolation test was extended to compare the results of calling CarpetX's interpolator directly with the results of calling it through the new interface.
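The comparison needs a point-by-point check, assuming both code paths interpolate the same list of points. A minimal sketch follows. The two inputs stand in for values from calling CarpetX's interpolator directly and from calling it through the new interface. The function names and tolerances are hypothetical and are not taken from the AHFinder test.

```python
import math
from typing import Optional, Sequence


def first_mismatch(direct: Sequence[float], via_interface: Sequence[float],
                   rel_tol: float = 1e-12, abs_tol: float = 1e-14) -> Optional[int]:
    """Return the index of the first point where the two results disagree,
    or None if every point agrees within the (hypothetical) tolerances."""
    if len(direct) != len(via_interface):
        raise ValueError("both paths must interpolate the same set of points")
    for i, (a, b) in enumerate(zip(direct, via_interface)):
        if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
            return i
    return None


def results_match(direct: Sequence[float], via_interface: Sequence[float]) -> bool:
    """True if the direct call and the interface call agree at every point."""
    return first_mismatch(direct, via_interface) is None
```

A small tolerance, rather than exact equality, leaves room for round-off if the two paths evaluate the same stencil in a different order.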

CarpetX Arrays

- Added support for distrib=const arrays in CarpetX

- Combined the structures for arrays and scalars into a single struct

- All scalar code was extended to handle both scalars and arrays

- Added test in TestArray thorn to verify that array data is allocated correctly and behaves as expected when accessed
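One way to combine the scalar and array structures is to treat a scalar as a 0-dimensional array that holds exactly one value. The Python model below is a hypothetical illustration of that idea, not CarpetX's actual C++ struct. It assumes distrib=const, so every process allocates the full array.

```python
from dataclasses import dataclass, field
from math import prod


@dataclass
class ArrayGroupData:
    """Hypothetical model of a single struct serving both scalars and arrays.

    A scalar is simply an array with an empty shape: prod(()) == 1, so it
    still gets exactly one storage slot and needs no special-case code.
    """
    name: str
    shape: tuple[int, ...] = ()
    data: list[float] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        if any(n < 0 for n in self.shape):
            raise ValueError(f"{self.name}: negative extent in {self.shape}")
        # distrib=const: every process allocates the complete array.
        self.data = [0.0] * prod(self.shape)

    @property
    def dim(self) -> int:
        return len(self.shape)

    def flat_index(self, *idx: int) -> int:
        """Map an n-dimensional index to a flat offset, first index fastest."""
        if len(idx) != self.dim:
            raise IndexError(f"{self.name}: expected {self.dim} indices, got {len(idx)}")
        offset, stride = 0, 1
        for i, n in zip(idx, self.shape):
            if not 0 <= i < n:
                raise IndexError(f"{self.name}: index {i} outside [0, {n})")
            offset += i * stride
            stride *= n
        return offset


scalar = ArrayGroupData("s")          # 0-dimensional: one value
array = ArrayGroupData("a", (3, 4))   # 12 values
array.data[array.flat_index(2, 1)] = 1.0
```

Because the scalar path is just the `dim == 0` case, the same allocation and indexing code serves both variable types. This mirrors the motivation for merging the two structs.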

CarpetX DynamicData

- Overloaded the DynamicData function for use with CarpetX

- Added the necessary data storage in the array group data to provide the dynamic data when requested

- Added test in TestArray thorn to verify that dynamic data for grid functions, scalars, and arrays returns the correct data

- The test also serves as a basic test of read/write declarations, since all three variable types are written and read in it
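For a distrib=const array, every process holds a complete copy. Part of the dynamic data a test can check is therefore fixed by the global shape: the local shape equals the global shape, and the lower bounds are zero. The sketch below assumes a simplified record whose field names are loosely modelled on Cactus' cGroupDynamicData. It is illustrative only and does not reproduce the TestArray thorn.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DynamicDataRecord:
    """Simplified, hypothetical record of what a DynamicData query reports."""
    dim: int
    gsh: tuple[int, ...]   # global shape
    lsh: tuple[int, ...]   # local (this process) shape
    lbnd: tuple[int, ...]  # global index of the first local point


def const_array_record(gsh: tuple[int, ...]) -> DynamicDataRecord:
    """Expected record for a distrib=const array; a scalar is the case gsh == ()."""
    return DynamicDataRecord(dim=len(gsh), gsh=gsh, lsh=gsh,
                             lbnd=tuple(0 for _ in gsh))


def check_const_array(rec: DynamicDataRecord) -> list[str]:
    """Return the inconsistencies found in rec (an empty list means it passed)."""
    problems = []
    if any(len(v) != rec.dim for v in (rec.gsh, rec.lsh, rec.lbnd)):
        problems.append("vector length does not match dim")
    if rec.lsh != rec.gsh:
        problems.append("lsh != gsh for a non-distributed array")
    if any(b != 0 for b in rec.lbnd):
        problems.append("lbnd must be zero when each process holds the whole array")
    return problems
```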

Multipole/qc0

- Multipole incorporated into cactusamrex repository

- The test produces data that matches the old code (be sure to use the same interpolator)

- The qc0 simulation is quite slow, but it has been sped up by switching from Weyl to WeylScal4. During testing, Weyl was replaced with WeylScal4 because the compiler was failing to optimize Weyl's code, which resulted in significantly longer runtimes; this can be seen in the data from simulations on Spine. Even so, the Toolkit paper needs a simulation time of ~300M to capture the full merger, and the simulation speed must improve enough to make this viable before we can compare with previous simulations. We can currently simulate ~200M, but this takes an entire week on the 40-core machine Melete. Tiling causes a minor slowdown, because tiling only provides benefits with OpenMP, which isn't active in these runs. In addition, some areas of the code likely still use explicit loops, which with tiling will compute the same data multiple times.

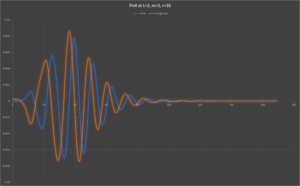
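Here is a back-of-the-envelope estimate of what the ~300M target means. It assumes the cost per unit of simulation time stays at the observed ~200M per week on Melete; in practice the cost may change as the run evolves. The 3.5-day budget below is a hypothetical target, not a requirement from these notes.

```python
def days_needed(target_m: float, reached_m: float = 200.0, days_taken: float = 7.0) -> float:
    """Wall-clock days to reach target_m at a constant rate of
    reached_m simulated M per days_taken wall-clock days."""
    rate = reached_m / days_taken  # simulated M per wall-clock day
    return target_m / rate


def speedup_needed(target_m: float, budget_days: float) -> float:
    """Factor by which the run must get faster to reach target_m within budget_days."""
    return days_needed(target_m) / budget_days


print(f"300M at the current rate: {days_needed(300.0):.1f} days")
print(f"speedup for a hypothetical 3.5-day budget: {speedup_needed(300.0, 3.5):.1f}x")
```

At the current rate, the full 300M run would take about a week and a half of wall-clock time.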

- A 200M simulation ran on Melete. Plots of the data show a well-behaved waveform.

| Simulation | Simulation Time | CCTK_INITIAL Time | CCTK_EVOL Time | CCTK_ANALYSIS Time |
|---|---|---|---|---|
| Weyl without Tiling | 25892.98 | 1929.70 | 12409.22 | 7577.40 |
| WeylScal4 without Tiling | 19193.38 | 1945.42 | 13275.02 | 668.74 |
| No Weyl without Tiling | 18077.91 | 1936.15 | 12573.32 | 578.31 |
| No Weyl with Tiling | 20105.39 | 1953.53 | 13959.25 | 643.85 |
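The effect of replacing Weyl and of turning on tiling can be read off the table with a few ratios. The numbers below are copied from the table, in the units reported there. The dictionary layout is only for illustration.

```python
# Timer totals from the table above: (total, CCTK_INITIAL, CCTK_EVOL, CCTK_ANALYSIS).
runs = {
    "Weyl without Tiling":      (25892.98, 1929.70, 12409.22, 7577.40),
    "WeylScal4 without Tiling": (19193.38, 1945.42, 13275.02, 668.74),
    "No Weyl without Tiling":   (18077.91, 1936.15, 12573.32, 578.31),
    "No Weyl with Tiling":      (20105.39, 1953.53, 13959.25, 643.85),
}
TOTAL, INITIAL, EVOL, ANALYSIS = range(4)

# How much more expensive the analysis bin is with Weyl than with WeylScal4.
analysis_ratio = runs["Weyl without Tiling"][ANALYSIS] / runs["WeylScal4 without Tiling"][ANALYSIS]

# Analysis time attributable to WeylScal4 itself (relative to no Weyl at all).
scal4_analysis_cost = runs["WeylScal4 without Tiling"][ANALYSIS] - runs["No Weyl without Tiling"][ANALYSIS]

# Relative increase in total time from turning on tiling (no Weyl in either run).
tiling_overhead = runs["No Weyl with Tiling"][TOTAL] / runs["No Weyl without Tiling"][TOTAL] - 1.0

print(f"Weyl vs WeylScal4 analysis time: {analysis_ratio:.1f}x")
print(f"WeylScal4 share of analysis time: {scal4_analysis_cost:.1f}")
print(f"tiling overhead on total time: {tiling_overhead:.1%}")
```

With these values, Weyl's analysis bin costs roughly 11 times as much as WeylScal4's. Tiling adds about 11% to the total runtime of the no-Weyl run.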

| Simulation | Simulation Time | CCTK_INITIAL Time | CCTK_EVOL Time | CCTK_ANALYSIS Time |
|---|---|---|---|---|
| WeylScal4 (40 procs) | 2069.37 | 290.12 | 1280.10 | 109.41 |
| WeylScal4 (20 procs) | 3293.13 | 496.75 | 1982.01 | 185.66 |
| WeylScal4 (8 procs) | 6791.85 | 1009.68 | 4106.06 | 399.76 |
| Weyl (8 procs) | 11348.08 | 986.38 | 4151.04 | 4199.59 |
| No Weyl (8 procs) | 6718.82 | 994.97 | 4149.12 | 308.99 |
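The WeylScal4 rows form a small strong-scaling series. The sketch below computes speedup and parallel efficiency relative to the 8-processor run, using the total simulation times from the table. Efficiency here means the measured speedup divided by the ideal (linear) speedup.

```python
# Total simulation times of the WeylScal4 runs, keyed by processor count.
totals = {8: 6791.85, 20: 3293.13, 40: 2069.37}


def strong_scaling(times: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Map each processor count to (speedup, efficiency) relative to the smallest count."""
    base_p = min(times)
    base_t = times[base_p]
    result = {}
    for p, t in sorted(times.items()):
        speedup = base_t / t
        ideal = p / base_p
        result[p] = (speedup, speedup / ideal)
    return result


scaling = strong_scaling(totals)
for p, (speedup, efficiency) in scaling.items():
    print(f"{p:2d} procs: speedup {speedup:.2f}, efficiency {efficiency:.0%}")
```

Going from 8 to 40 processors (5 times as many) gives roughly a 3.3x speedup, or about 66% efficiency.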

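- To track down the primary source of the slowdown, several simulations were run with 40 processors on Melete for only 3 iterations each. To simplify the setup further, TwoPunctures, Multipole, and WeylScal4 were removed, and the "Minkowski" initial data from ADMBase was used instead. The table compares the full simulation with runs that use a zero RHS, the "constant" ODESolver method, or both reductions together. Times are cumulative; the number in parentheses is the time taken by that iteration alone.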

| Simulation | Iteration 0 Time | Iteration 1 Time | Iteration 2 Time | Iteration 3 Time |
|---|---|---|---|---|
| Full Simulation | 25 | 57 (32) | 85 (28) | 113 (28) |
| Zero RHS | 23 | 47 (24) | 68 (21) | 88 (20) |
| "constant" ODESolver | 25 | 37 (12) | 46 (9) | 55 (9) |
| Both reductions | 25 | 35 (10) | 42 (7) | 49 (7) |
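Because the timings are cumulative, the steady-state cost per iteration is the difference between the last two readings. The sketch below reproduces the parenthesized numbers. It then attributes the per-iteration cost to the RHS and to the ODESolver step, under the simplifying assumption that each reduction removes only its own share of the work.

```python
# Cumulative timer readings after iterations 0-3, copied from the table above.
cumulative = {
    "Full Simulation": [25, 57, 85, 113],
    "Zero RHS": [23, 47, 68, 88],
    '"constant" ODESolver': [25, 37, 46, 55],
    "Both reductions": [25, 35, 42, 49],
}


def per_iteration(times: list[int]) -> list[int]:
    """Time spent in each iteration after iteration 0 (differences of cumulative readings)."""
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Steady-state cost: the last per-iteration value of each run.
steady = {name: per_iteration(t)[-1] for name, t in cumulative.items()}

# Assuming each reduction removes only its own work:
rhs_share = steady["Full Simulation"] - steady["Zero RHS"]              # RHS evaluation
ode_share = steady["Full Simulation"] - steady['"constant" ODESolver']  # time integration
leftover = steady["Both reductions"]                                    # work neither removes

for name, t in cumulative.items():
    print(f"{name:22s} per iteration: {per_iteration(t)}")
print(f"RHS ~{rhs_share}, ODESolver ~{ode_share}, remaining ~{leftover} per iteration")
```

Under that assumption, the ODESolver step (~19 per iteration) costs more than twice as much as the RHS evaluation (~8), and about 7 per iteration remains even with both reductions. The three shares add up to 34 rather than the measured 28, so the attribution is only approximate.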

- Turning on tiling causes a significant slowdown across the board. Without tiling, sync statements take the same order of time as (if slightly longer than) those in Carpet; with tiling, they are 1-2 orders of magnitude slower. All functions are similarly slowed, which indicates that tiling is not implemented efficiently at present. We also watched the simulation in top while it ran with 4 processes and 10 threads. Each process uses 6GB, but this rises to ~12GB during the ODESolver phase and immediately returns to 6GB once ODESolver finishes. This might also contribute to CarpetX's slowness.

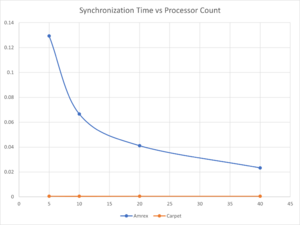

Synchronization Speed

- Added thorn SyncTestX to facilitate comparisons of syncs in CarpetX and Carpet

- Parfiles are included for simulations using both drivers that generate the same mesh

- Syncs were timed by placing timers in SyncGroupsByDirI (CarpetX) and SyncGroups (Carpet)

- CarpetX is ~2 orders of magnitude slower

Comparison of synchronization times between CarpetX (with tiling) and Carpet. For this mesh, Carpet shows similar synchronization times regardless of processor count, while CarpetX improves significantly with higher processor counts. However, CarpetX remains much slower: with 40 processors, a sync takes about 0.02 in CarpetX versus 0.0004 in Carpet, roughly a factor of 50.
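The measurement described above amounts to wrapping each sync routine in a timer and accumulating the elapsed time. The Python sketch below shows that pattern, with `sync_groups` as a hypothetical stand-in for SyncGroupsByDirI or SyncGroups. It also converts the 40-processor timings into orders of magnitude; a factor of 50 is about 1.7.

```python
import math
import time
from collections import defaultdict
from functools import wraps

# Accumulated wall-clock seconds and number of calls, keyed by function name.
total_time: dict[str, float] = defaultdict(float)
call_count: dict[str, int] = defaultdict(int)


def timed(func):
    """Decorator that accumulates the time spent inside func."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            total_time[func.__name__] += time.perf_counter() - start
            call_count[func.__name__] += 1
    return wrapper


@timed
def sync_groups(groups: list[str]) -> int:
    """Hypothetical stand-in for a driver's sync routine."""
    return len(groups)


def orders_of_magnitude(slow: float, fast: float) -> float:
    """log10 of the slowdown factor."""
    return math.log10(slow / fast)


for _ in range(3):
    sync_groups(["ADMBase::metric"])
print(f"sync_groups: {call_count['sync_groups']} calls, {total_time['sync_groups']:.2e} s")
print(f"40 processors: {orders_of_magnitude(0.02, 0.0004):.2f} orders of magnitude")
```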

Open issues/bugs

- Because storage is always on in CarpetX, CarpetX attempts regridding, etc., which causes validity failures. These occur because the expected number of time levels does not match the actual number of time levels.

- If there are too few cells, an unclear error appears that boils down to "assertion 'bc == bcrec' fails". This happens because the cells are so large relative to the number of points that multiple physical boundaries fall within one cell. We recommend detecting this case and issuing a clearer error message. Alternatively, the code could be changed to let such runs work, though I am not sure this would be worthwhile if it takes much effort or time.

- CarpetX seems to use substantial resources for the qc0 test, and we were unsure why: memory usage is several times higher than Roland's estimate for the given grid size. Eric has stated that this is due to the compiler failing to optimize the Weyl code.

- Validity checking is incorrect for periodic boundary conditions. These boundary conditions are handled by AMReX, so no boundary conditions are run at the Cactus level. Because this is all internal to AMReX, CarpetX never marks the boundaries as valid. To fix this, whenever the ghost zones are marked valid, the boundaries should also be marked valid, but only when periodic boundary conditions are in use. Once this bug is fixed, Weyl's schedule.ccl should be reviewed, since it contains hacks to bypass the bug.

- A strange error occurred while working on the gauge wave test. It was triggered by assert(bc == bcrec) in the prolongate_3d_rf2 function in CarpetX/src/prolongate_3d_rf2.cxx. We determined that running with --oversubscribe triggers this error, but it is unclear why. The error does not occur with the TwoPunctures initial data; it only started after switching to the gauge wave initial data. It also causes a significant slowdown and uses a large amount of memory. Roland thinks that an error estimator for regridding could be the cause, but that doesn't explain why the assert is triggered.
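The proposed fix for periodic boundaries can be sketched with a validity bit mask per grid function. In this hypothetical model, filling the ghost zones always marks them valid. With periodic boundaries, the boundary points are marked valid at the same time, because AMReX fills them and no Cactus boundary condition runs. The flag names and functions are illustrative and are not CarpetX's API.

```python
from dataclasses import dataclass
from enum import Flag


class Valid(Flag):
    """Hypothetical validity bits for the regions of one grid function."""
    NONE = 0
    INTERIOR = 1
    GHOSTS = 2
    BOUNDARY = 4
    EVERYWHERE = 7


@dataclass
class GridFunctionState:
    valid: Valid = Valid.NONE


def after_ghost_fill(state: GridFunctionState, periodic: bool) -> None:
    """Update validity after AMReX has filled the ghost zones.

    With periodic boundaries AMReX also fills the boundary points and no
    Cactus boundary condition runs afterwards, so the boundary is marked
    valid here; otherwise a boundary condition must still do that later."""
    state.valid |= Valid.GHOSTS
    if periodic:
        state.valid |= Valid.BOUNDARY


def require_valid(state: GridFunctionState, needed: Valid) -> None:
    """Raise if any region in `needed` has not been marked valid."""
    missing = Valid(needed.value & ~state.valid.value)
    if missing:
        raise RuntimeError(f"regions not valid: {missing}")


periodic_gf = GridFunctionState(Valid.INTERIOR)
after_ghost_fill(periodic_gf, periodic=True)
require_valid(periodic_gf, Valid.EVERYWHERE)  # passes: nothing is left invalid
```

Tying the boundary flag to the same step that validates the ghost zones keeps the periodic case from needing per-thorn schedule workarounds.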

Open tasks/improvements

- SymmetryInterpolate isn't hooked up yet, but commented-out code provides a starting point

- Currently, CarpetX and Cactus both have parameters for interpolation order. For example, qc0.par has to set CarpetX::interpolation_order = 3 as well as Multipole::interpolator_pars = "order=3". These should be condensed into a single setting. Since individual thorns set their own interpolation order, I assume that different thorns can choose different orders; if different variables are interpolated at different orders, the current implementation would break. Instead of using its own parameter, CarpetX should work with the existing infrastructure.

- CarpetX's interpolate function doesn't return error codes, whereas the old interpolator did. The new interpolate function should return the old error codes to fully reproduce the functionality of the old interpolator.

- The interpolation interface should report the error codes returned by TableGetIntArray()

- Distributed arrays are still not supported. It is unclear where these are used (at least to me). However, if they are needed, CarpetX will need to be extended to support them.

- The DynamicData test revealed a bug in the scalar validity code. We should therefore consider adding tests that specifically exercise the valid/invalid tracking for the various types of variables, for example tests that validate the poison routine, the NaN checker, etc.
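The sketch below reads the interpolation order from each caller's options string, such as Multipole's "order=3", instead of from a driver-wide CarpetX parameter. Problems are reported through error codes rather than silently ignored. The error-code names and values, the set of supported orders, and the simple whitespace-separated key=value parsing are all assumptions for illustration. The real Cactus interpolator parses its options into a key/value table with a richer syntax and defines its own error codes.

```python
from typing import Optional

# Hypothetical error codes; the real Cactus interpolator defines its own.
INTERP_OK = 0
ERR_BAD_OPTIONS = -1
ERR_NO_ORDER = -2
ERR_BAD_ORDER = -3

SUPPORTED_ORDERS = {1, 2, 3, 4}  # assumption for illustration


def parse_options(options: str) -> Optional[dict[str, str]]:
    """Parse whitespace-separated key=value pairs; return None if malformed."""
    result: dict[str, str] = {}
    for token in options.split():
        key, sep, value = token.partition("=")
        if not sep or not key or not value:
            return None
        result[key] = value
    return result


def interpolation_order(options: str) -> tuple[int, int]:
    """Return (error_code, order) for one interpolation call's options."""
    opts = parse_options(options)
    if opts is None:
        return ERR_BAD_OPTIONS, 0
    if "order" not in opts:
        return ERR_NO_ORDER, 0
    try:
        order = int(opts["order"])
    except ValueError:
        return ERR_BAD_ORDER, 0
    if order not in SUPPORTED_ORDERS:
        return ERR_BAD_ORDER, 0
    return INTERP_OK, order


# Each thorn (or variable) carries its own order in its options string.
print(interpolation_order("order=3"))  # Multipole-style options
print(interpolation_order("order=7"))  # unsupported order, rejected with an error code
```

Treating a missing order as an error is a design choice made here for clarity; falling back to a documented default would also be reasonable.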

Closed issues/bugs

- R. Haas resolved the issue with how CarpetX handled the difference between cell- and vertex-centered grid functions from old thorns. Incorrect default settings caused problems with interpolation, looping, etc. For example, TwoPunctures and Multipole required a hack in which loops ran to cctk_lsh[#]+1 instead of cctk_lsh[#]. Now that CarpetX/Cactus handles this data properly, old thorns should not need hacks to loop over grid functions.
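Along each axis, vertex-centered data sits on cell corners and therefore has one more point than there are cells, whereas cell-centered data has one point per cell. One plausible reading of the old cctk_lsh[#]+1 hack is that vertex-centered data was being described with the cell-centered count. The hypothetical helpers below illustrate that off-by-one.

```python
def num_points(num_cells: int, centering: str) -> int:
    """Number of grid points along one axis covering num_cells cells."""
    if centering == "vertex":
        return num_cells + 1  # points on cell corners, including both ends
    if centering == "cell":
        return num_cells      # one point at the center of each cell
    raise ValueError(f"unknown centering: {centering!r}")


def loop_indices(lsh: int, plus_one_hack: bool = False) -> list[int]:
    """Indices a thorn's loop visits when it runs to lsh (or lsh + 1 with the old hack)."""
    return list(range(lsh + 1 if plus_one_hack else lsh))


cells = 8
correct = loop_indices(num_points(cells, "vertex"))      # all 9 vertices
mislabelled = loop_indices(num_points(cells, "cell"))    # misses the last vertex
patched = loop_indices(num_points(cells, "cell"), plus_one_hack=True)
```

Once the driver reports the vertex-centered count itself, the plain loop visits every point and the +1 patch becomes unnecessary.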