Improved scheduling

Some months ago, we discussed possible ways to make scheduling in Cactus more straightforward for thorn writers. Please see Adding requirements to the Cactus scheduler for the work that was done before. We then had a telecon on 24-Oct-2011 (minutes?) in which we did some brainstorming and introduced the ideas to some new people.

Interested parties: Ian, Erik, Barry, Steve, Alexander, Peter, Oleg, Bruno M, Christian R, Frank

Current status

- There is a branch of the Cactus flesh which supports REQUIRES and PROVIDES directives in schedule.ccl files.

- There is a cut-down WaveToy thorn with very simple schedule requirements.

Possible plan

(including long-term vision. Please discuss!)

- Change REQUIRES and PROVIDES to READS and WRITES - we seem to have consensus that this makes more sense

- Define semantics for accessing only parts of the domain, e.g. boundaries, symmetries. What domain parts should be supported? Should sync, prolongation, buffer zone prolongation and symmetries be applied automatically? Do we then just have interior, boundary, and everything? If so, note that interior | boundary != everything, since we don't have an explicit notion of ghost zones and buffer zones. Should there be, in addition to a CCL syntax, an API for declaring things at run-time? If so, should this API be more extensive than the CCL syntax? It is probably best to start by writing down, on a whiteboard, the schedule for a parallel unigrid WaveToy with MoL.

- Set up a sequence of functions in CCTK_INITIAL with dependency information between variables. Make Carpet decide the scheduling order within CCTK_INITIAL based on these. We will do this by having Carpet call a function in an external thorn or the flesh which tells it what functions need to be run to update certain variables (see later).

- Get evolution working

- Get boundary conditions working

- Make everything work for unigrid

- Make Carpet handle regridding/restricting/syncing/prolongating of all variables by calling scheduled functions which say they can write these variables.

- Add READS and WRITES information to all scheduled functions in the Einstein Toolkit - obviously this will be a large task and should be done bit-by-bit

- Check that the schedule that is derived is the same as before (except where it was wrong or ambiguous before - Frank mentioned that he once tried to change Cactus to schedule functions in the reverse order where it is currently ambiguous, and this led to errors, so there are probably some thorns which do not correctly specify orderings).

- Have both data-driven and current systems available at the same time. We probably will need elements of both in the end, and this makes compatibility much easier to handle. We can slowly transition different parts of the schedule to the new system where possible. We can check the two systems against each other as well.
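As a rough illustration of letting the driver decide the order within CCTK_INITIAL from dependency information alone, the following Python sketch derives an execution order from READS and WRITES declarations using a topological sort. The function names, variable names and the dictionary layout are assumptions made for this example; they are not taken from the flesh branch or from any real thorn.

```python
from graphlib import TopologicalSorter

# Hypothetical READS/WRITES declarations for three functions in CCTK_INITIAL.
# All names here are illustrative only.
declarations = {
    "WaveToy_InitialData": {"reads": {"x", "y", "z"}, "writes": {"phi"}},
    "Grid_Coordinates": {"reads": set(), "writes": {"x", "y", "z"}},
    "WaveToy_Energy": {"reads": {"phi"}, "writes": {"energy"}},
}


def derive_order(declarations):
    """Return one execution order consistent with the data dependencies."""
    # Map each variable to the set of functions that write it.
    writers = {}
    for name, decl in declarations.items():
        for var in decl["writes"]:
            writers.setdefault(var, set()).add(name)

    # A function depends on every other function that writes something it reads.
    graph = {}
    for name, decl in declarations.items():
        deps = set()
        for var in decl["reads"]:
            deps |= writers.get(var, set())
        deps.discard(name)  # reading and writing the same variable is not a self-dependency
        graph[name] = deps

    # static_order() raises graphlib.CycleError if the declarations are circular.
    return list(TopologicalSorter(graph).static_order())


order = derive_order(declarations)
```

Two functions that both read and write the same variable (two "filters", say) would form a cycle in this simple model, which is one way the ambiguities discussed in these notes would show up.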

Issues/thoughts

- Think about how to deal with MoL. Should we first try to get evolution working without MoL?

- We almost certainly will need to have some overlap between the old scheduler and the new scheduler. For this it probably makes sense to allow (and ignore) the new keywords in the flesh even in trunk so that we don't need a separate branch for every thorn that we add the keywords to. In Carpet, we could have some parameters to control the scheduler - i.e. to use the old one or the new one.

- What shall we do with PUGH?

- Do thorns need to declare dependencies on all Cactus variables that they access? e.g. grid scalars, grid arrays, spherical surfaces etc? I think yes. What about flesh variables: cctk_nghostzones etc? I think no.

- Is it too much effort for a thorn writer to declare all the dependencies in the schedule.ccl file?

- We cannot derive the dependencies automatically from the source file. For strict checking, we could make variables which we have not asked for either null pointers or not available at the compiler level - I prefer the latter but the former would be easier to implement first.

- Eventually we can check writes as well as reads by doing checksumming of data after a function is called. This is mostly important for checking that different regions of the grid have been written according to the scheduling.

- Idea: can we eventually eliminate all bins and groups and "before/after" directives, and drive the scheduling entirely based on data dependency? Would need to have "contexts" for variables. e.g. saying that a function writes gxx_initial, and MoL then reads gxx_initial and writes gxx.

- Identifying different parts of the grid: inner and outer ghost points - cf CaKernel

- Do we want to have dependencies on functions as well as data? Does this make some things simpler?

- How can we "insert" functions into "pipelines", e.g. adding noise or some other perturbation, when the inserted functions might not be aware of each other? How do we resolve the order of these? The user is the only one who knows what order they should be run in, so this has to be provided in the parameter file.

- Should some functions just be marked as "filters"?

- Can we say that we only need e.g. the outermost point of the grid to be synced because our particular algorithm can fill in some of the ghost points? (optimisation)

- Automatically determine number of ghost/boundary points

- Standard method for application thorns to do looping over different parts of the grid

- Can the schedule change at runtime? When would be a good time to schedule it? Perhaps at the same points at which recovery can happen. It is important to consider the interaction with Berger-Oliger timestepping.

- Checksumming won't work for determining if somebody has written data back the same as was there. Maybe invalidate data first (poison). What if you read and write the same variable? Then we just don't have any way to check correctness and have to assume the code is correct.

- Can we have dependencies on parameters? e.g. if a parameter is steered, some other functions might need to be rerun to update things (e.g. regridding controlled by parameter). Don't want to list all the parameters which are used as input for a function.

- How do we say which spherical surface we write, when this is determined at run-time from a parameter? Can we put parameters into these WRITES statements? e.g. WRITES: SphericalSurface::sf_centroid_x[ah_sf] where ah_sf is a parameter?

- Completely separate idea: Named spherical surfaces

- Christian introduced SphericalSlice

- functionattribute structure - private flesh structure containing scheduled function information

- requirements.cc has already been committed to Carpet. Its use depends on an ifdef, and it does some checking.

- We will write a new thorn, whose functionality might eventually go into the flesh or Carpet. This will call a new flesh API to get information about scheduled functions, including the READS and WRITES sections. This thorn will then convert this information into a dependency tree. The thorn will provide a function which takes a list of (parts of) variables which must be updated, and then returns (or calls a callback function on) the required scheduled functions to evaluate those variables.

- Everything which is specified by data dependencies should be ensured. This will leave ambiguities - for example, when should "filter" functions be called? Probably the simplest way to resolve these is to use BEFORE and AFTER. In the example of adding noise to initial data, you have an initial data thorn which WRITES gxx, and another thorn (MoL) which READS gxx (for its RHS). A filter function which adds noise to gxx both READS and WRITES gxx. Does it get called before or after MoL? In the current thinking, we would say that the Noise function is called BEFORE MoL. This is possible because the author of Noise knows that MoL exists. There will be situations where the two thorns don't know about each other, and in that case the only person who can resolve this is the end-user, in the parameter file. We need a way to specify these orderings. Suppose we have two filters: Noise and Perturb. Both of these are scheduled BEFORE MoL, and both READ and WRITE gxx. We could have a flesh string parameter called

dependencies = Noise < Perturb, <fn2> < <fn3>, ...

where "<" means "before". This provides an ordering on scheduled functions. This is one way to solve this problem - there probably are other ways.
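A minimal Python sketch of how such a parameter string might be interpreted is given below. It assumes the comma-separated `A < B` form shown above, with plain function names on either side; the helper names `parse_dependencies` and `order_functions` are hypothetical and exist only for this illustration.

```python
from graphlib import TopologicalSorter


def parse_dependencies(spec):
    """Parse 'A < B, C < D' into a list of (before, after) pairs."""
    pairs = []
    for clause in spec.split(","):
        clause = clause.strip()
        if not clause:
            continue
        before, sep, after = clause.partition("<")
        before, after = before.strip(), after.strip()
        if not sep or not before or not after:
            raise ValueError(f"malformed ordering clause: {clause!r}")
        pairs.append((before, after))
    return pairs


def order_functions(functions, spec):
    """Order the given function names so that every 'A < B' clause is respected."""
    graph = {name: set() for name in functions}
    for before, after in parse_dependencies(spec):
        if before not in graph or after not in graph:
            raise ValueError(f"unknown function in clause: {before} < {after}")
        graph[after].add(before)
    # Contradictory clauses (A < B, B < A) raise graphlib.CycleError here.
    return list(TopologicalSorter(graph).static_order())


filters = order_functions(["Perturb", "Noise"], "Noise < Perturb")
```

Reporting contradictory or unknown entries as errors, rather than ignoring them, seems the safer choice, since the end-user is the only source of this ordering information.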

Work plan

What would we like to achieve during this workshop? Just discussion, or also some coding?

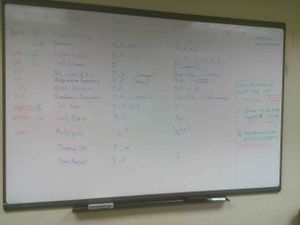

SCHEDULING

Problems

* Complicated to get it to work with mesh refinement

* Verbose, you have to type a lot of things

Aim:

* User should just specify what function sets/does

* Add information to the schedule CCL file to say what variables are read/written

* Fewer bins

* Look at what DAGMan does

* Look at Uintah http://www.sci.utah.edu/research/computing/271-uintah.html

* Create variables or modify variables

* If you have a hybrid,

* variable read/write provides a check

* can determine sync/prolong

* automatic memory allocation/freeing
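The check that declared reads and writes could provide might be prototyped with checksums and poisoning, as raised elsewhere in these notes. The Python sketch below is only a toy model: grid variables are plain lists of floats, and the helper names `run_checked` and `unwritten_points` are assumptions made for this example, not existing Cactus or Carpet functions.

```python
import hashlib
import math
import struct


def checksum(values):
    """Checksum a list of floats via their binary representation."""
    return hashlib.sha256(struct.pack(f"{len(values)}d", *values)).hexdigest()


def run_checked(func, data, declared_writes):
    """Run func(data); return names of variables modified without a WRITES declaration."""
    before = {name: checksum(vals) for name, vals in data.items()}
    func(data)
    changed = {name for name, vals in data.items() if checksum(vals) != before[name]}
    return changed - set(declared_writes)


def unwritten_points(func, data, declared_writes):
    """Poison declared outputs with NaN, run func, and report the indices left unwritten.

    This is only meaningful if func does not also read the poisoned variables.
    """
    for name in declared_writes:
        data[name][:] = [math.nan] * len(data[name])
    func(data)
    return {
        name: [i for i, v in enumerate(data[name]) if math.isnan(v)]
        for name in declared_writes
    }


def sloppy(data):
    """Example function that declares only phi but also modifies psi."""
    data["phi"][0] = 1.0
    data["psi"][1] = 2.0


def interior_only(data):
    """Example function that writes only the middle point of phi."""
    data["phi"][1] = 5.0


undeclared = run_checked(sloppy, {"phi": [0.0, 0.0], "psi": [0.0, 0.0]}, ["phi"])
missing = unwritten_points(interior_only, {"phi": [0.0, 0.0, 0.0]}, ["phi"])
```

Checksums catch undeclared writes but cannot detect a function that writes back the values that were already there, which is why the second helper poisons the declared outputs first.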

BUILD SYSTEM

* Top two priorities:

1) Python front end

2) Understand what the current system does

* Grammars for CCL

* enables other programs (e.g. Kranc) to access contents

* makes things less ambiguous, more professional

* Document CACTUS_CONFIGS_DIR

* Should there be an ARRANGEMENTS_DIR or ARRANGEMENTS_PATH?

* Can the build system be simpler?

* Python front-end: Ticket 332 on Cactus Makefile replacement

* Do this first because it would be easy

* Generate master make

* Alternatives to Make?

* ant - Alexander will study

* cmake

* maven - Has a C/C++ plugin

* Progress indicator?

* Cactus test suite interface

* list test suites

* run just one test

* Need non-interactive test suite mode

* Ian believes this shouldn't just be in simfactory

* Maybe a lot of this could be fixed with better documentation

* Probably no one understands all of it

* Heavily tied into autotools

Code

Flesh

New function GetScheduledFunctionData returns a list of structures with the schedule information of each scheduled function. Do we also want a GetScheduledGroupData for schedule groups? Do we still need schedule groups?

DataDrivenScheduler

Provides functions: GetDependentFunctions(vars) -> DAG of functions which should be traversed in order to compute all the variables in vars. This calls GetScheduledFunctionData. vars will have to be a list of objects which indicate both the variable and the part of the grid. Need a representation of the different parts of the grid - maybe we want to OR these together. Carpet will be calling this function. Might want to specify the Cactus timebin that we are interested in for this as well.
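To make the intended behaviour of GetDependentFunctions more concrete, here is a hedged Python sketch. The `ScheduledFunction` record stands in for whatever GetScheduledFunctionData would return; its fields, the use of (variable, gridpart) pairs with string grid parts, and the exact-match rule between a read and a write are all simplifying assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledFunction:
    """Hypothetical stand-in for one entry returned by GetScheduledFunctionData."""
    name: str
    timebin: str
    reads: frozenset = frozenset()   # (variable, gridpart) pairs
    writes: frozenset = frozenset()  # (variable, gridpart) pairs


def get_dependent_functions(targets, functions, timebin=None):
    """Return {function name: names of functions that must run before it}.

    Only functions needed to compute the requested targets are included.
    """
    if timebin is not None:
        functions = [f for f in functions if f.timebin == timebin]

    # Index the functions by what they write.
    writers = {}
    for func in functions:
        for item in func.writes:
            writers.setdefault(item, []).append(func)

    # Walk backwards from the targets, collecting every function that is needed.
    needed = {}
    pending = list(targets)
    visited = set()
    while pending:
        item = pending.pop()
        if item in visited:
            continue
        visited.add(item)
        for func in writers.get(item, []):
            if func.name not in needed:
                needed[func.name] = func
                pending.extend(func.reads)

    # Each needed function depends on the writers of everything it reads.
    return {
        name: {w.name for item in func.reads for w in writers.get(item, [])
               if w.name != name}
        for name, func in needed.items()
    }


PHI_IN, PHI_BD = ("phi", "interior"), ("phi", "boundary")
functions = [
    ScheduledFunction("InitialData", "CCTK_INITIAL", writes=frozenset({PHI_IN})),
    ScheduledFunction("Boundaries", "CCTK_INITIAL",
                      reads=frozenset({PHI_IN}), writes=frozenset({PHI_BD})),
    ScheduledFunction("Energy", "CCTK_INITIAL",
                      reads=frozenset({PHI_IN, PHI_BD}),
                      writes=frozenset({("energy", "interior")})),
    ScheduledFunction("Unrelated", "CCTK_INITIAL",
                      writes=frozenset({("psi", "interior")})),
]
dag = get_dependent_functions([("energy", "interior")], functions, "CCTK_INITIAL")
```

A real implementation would need to handle overlapping grid parts rather than exact matches, and to decide what to do when several functions write the same part of the same variable.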

varspec is {varnum, gridpart}

gridpart is an OR of Interior, PhysicalBoundary, SymmetryBoundary, InterprocessorBoundary, RefinementBoundary - or whatever we decide are the disjoint and covering parts of the grid. We could then combine some of them, for example by defining Everything = Interior | PhysicalBoundary | ... | RefinementBoundary. These identifiers could be defined in the flesh at some point (CCTK_Interior, CCTK_PhysicalBoundary etc.), and could be used in future in functions for looping over parts of the grid.
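The OR-able grid parts could be modelled as bit flags. The Python sketch below uses the five part names listed above; the numeric values, the `GridPart` class and the `missing_parts` helper are assumptions for illustration only, and the final set of parts is still to be decided.

```python
from enum import Flag


class GridPart(Flag):
    """Disjoint parts of the grid, combinable with | (illustrative values only)."""
    INTERIOR = 1
    PHYSICAL_BOUNDARY = 2
    SYMMETRY_BOUNDARY = 4
    INTERPROCESSOR_BOUNDARY = 8
    REFINEMENT_BOUNDARY = 16
    EVERYTHING = (INTERIOR | PHYSICAL_BOUNDARY | SYMMETRY_BOUNDARY
                  | INTERPROCESSOR_BOUNDARY | REFINEMENT_BOUNDARY)


def missing_parts(required, provided):
    """Return the grid parts that are required but have not been provided."""
    return required & ~provided


# A function that writes only the interior and the physical boundary
# leaves the remaining parts to be filled by something else.
written = GridPart.INTERIOR | GridPart.PHYSICAL_BOUNDARY
still_needed = missing_parts(GridPart.EVERYTHING, written)
```

Here `still_needed` contains the symmetry, interprocessor and refinement boundaries, which is the kind of information a driver could use to decide which sync, prolongation or symmetry operations remain to be applied.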

In summary: GetDependentFunctions({{varnum, gridpart}, ...}, bin) ->